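- 3.1 Unpack the GI installation package

- 3.2 Install and configure Xmanager

- 3.3 Grant permissions on the shared-storage LUNs

- 3.4 Configure GI through the Xmanager graphical interface

- 3.5 Verify the cluster state with crsctl

- 3.6 Test the cluster's failover (FAILED OVER) behavior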

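Oracle 19c RAC installation guide for Linux:

Part 1: Oracle 19c RAC Installation on Linux: Preparation

Part 2: Oracle 19c RAC Installation on Linux: GI Configuration

Part 3: Oracle 19c RAC Installation on Linux: DB Configuration

Installation environment for this article: OEL 7.6 + Oracle 19.3 GI & RAC

III. GI (Grid Infrastructure) Installation

3.1 Unpack the GI installation package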

su - grid

Unzip the GRID package into the grid user's $ORACLE_HOME:

[grid@db193 grid]$ pwd

/u01/app/19.3.0/grid

[grid@db193 grid]$ unzip /u01/media/LINUX.X64_193000_grid_home.zip

3.2 Install and configure Xmanager

After installing Xmanager Enterprise on your Windows machine, run the Xstart.exe executable

and configure a session as follows:

Session:db193

Host:192.168.1.193

Protocol:SSH

User Name:grid

Execution Command:/usr/bin/xterm -ls -display $DISPLAY

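Click RUN and enter the grid user's password. If a command window pops up normally, the configuration works.

Alternatively, start Xmanager - Passive and set the DISPLAY variable temporarily in a SecureCRT session to launch graphical programs directly: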

export DISPLAY=192.168.1.31:0.0
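As a minimal sketch of the temporary DISPLAY setup above: when the SSH session is opened from the same Windows machine that runs Xmanager - Passive, the client address can be read from the `SSH_CLIENT` variable that OpenSSH sets, instead of being typed by hand. The helper name `display_from_ssh_client` is hypothetical, and the sketch assumes an IPv4 client and X display 0.0, as in the example above.

```shell
# Hypothetical helper: build a DISPLAY value from an SSH_CLIENT string.
# SSH_CLIENT has the form "<client_ip> <client_port> <server_port>".
display_from_ssh_client() {
    local client_ip="${1%% *}"          # keep only the first field
    [ -n "$client_ip" ] || return 1     # nothing to use: not an SSH session
    printf '%s:0.0\n' "$client_ip"      # assumes X display 0.0
}

# Usage in the SSH session (assumes the SSH client host runs the X server):
#   export DISPLAY="$(display_from_ssh_client "$SSH_CLIENT")"
```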

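3.3 Grant permissions on the shared-storage LUNs

Device binding and permissions were already set up in "Oracle 19c RAC Installation on Linux, Part 1: Preparation -> 1.3 Shared storage planning", so nothing needs to be repeated here.

-- Just confirm on both nodes that the sd[b-g]2 devices behind the link files (/dev/asm*) have the correct permissions: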

# ls -l /dev/sd?2

brw-rw---- 1 root disk 8, 2 Jul 31 09:13 /dev/sda2

brw-rw---- 1 grid asmadmin 8, 18 Jul 31 18:06 /dev/sdb2

brw-rw---- 1 grid asmadmin 8, 34 Jul 31 18:06 /dev/sdc2

brw-rw---- 1 grid asmadmin 8, 50 Jul 31 18:06 /dev/sdd2

brw-rw---- 1 grid asmadmin 8, 66 Jul 31 17:10 /dev/sde2

brw-rw---- 1 grid asmadmin 8, 82 Jul 31 17:10 /dev/sdf2

brw-rw---- 1 grid asmadmin 8, 98 Jul 31 17:10 /dev/sdg2
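The visual check above can be sketched as a small filter over the `ls -l` output. The function name `check_asm_perms` is hypothetical; the expected owner `grid`, group `asmadmin`, mode `brw-rw----` and the device pattern `sd[b-g]2` are taken from the listing above and would need adjusting for a different layout.

```shell
# Hypothetical helper: read `ls -l` output on stdin and report any
# /dev/sd[b-g]2 device that is not grid:asmadmin with mode brw-rw----.
check_asm_perms() {
    awk '
        $NF ~ /^\/dev\/sd[b-g]2$/ {
            # $1 = mode, $3 = owner, $4 = group, $NF = device path
            if ($1 !~ /^brw-rw----/ || $3 != "grid" || $4 != "asmadmin") {
                print "BAD: " $NF " (" $1 " " $3 ":" $4 ")"
                bad = 1
            }
        }
        END { exit bad + 0 }   # non-zero exit status if anything was wrong
    '
}

# Usage, as root on each node:
#   ls -l /dev/sd?2 | check_asm_perms && echo "ASM device permissions OK"
```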

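3.4 Configure GI through the Xmanager graphical interface

Log in through Xmanager as the grid user, change to the $ORACLE_HOME directory, and run gridSetup.sh to configure GI: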

$ cd $ORACLE_HOME

$ ./gridSetup.sh

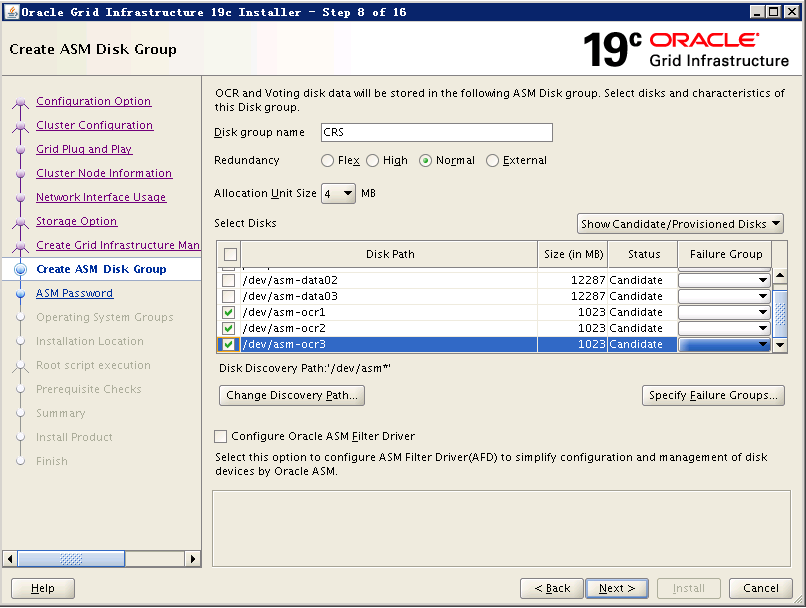

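GI configuration has in fact differed from earlier releases since 12cR2, and 19c is no exception. The screenshots below walk through the whole graphical installation:

Note: the Public network uses NIC enp0s3; ASM & Private use enp0s8.

Note: this screen covers separate storage for the GIMR. In earlier 12c and 18c installs this meant choosing a 40 GB disk with external redundancy (which unsettled many DBAs installing 12c RAC for the first time). In the 19c installer the option is unselected by default, which brings back the clean feel of the 11g days; I personally like this change a lot.

Note: little has changed here. I again chose three 1 GB disks with Normal redundancy for the OCR and voting disks.

Note: review every issue reported by the prerequisite check carefully, and click "Ignore All" only after confirming that each one really can be ignored. If the check reports missing RPM packages, install them with yum. This is my own low-spec test environment, so the memory and swap checks fail; that should not happen in a real production environment.

Note: when running the root scripts, make sure they finish on the first node before running them on the other nodes.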
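One way to enforce that ordering is to check the captured output of root.sh for its final success message before moving to the next node. The sketch below is an assumption-laden illustration: the function name `root_sh_succeeded` and the capture file path are hypothetical, and the marker it looks for is the `CLSRSC-325 ... succeeded` line that root.sh prints at the end of a successful run in this environment.

```shell
# Hypothetical helper: read captured root.sh output on stdin and succeed
# only if the final CLSRSC-325 success message is present.
root_sh_succeeded() {
    grep -q 'CLSRSC-325: .*succeeded'
}

# Usage, as root on the first node (the capture path is an assumption):
#   /u01/app/19.3.0/grid/root.sh 2>&1 | tee /tmp/root_sh_node1.out
#   root_sh_succeeded < /tmp/root_sh_node1.out \
#       && echo "OK to run root.sh on the next node"
```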

Run the root scripts as root on the first node:

[root@db193 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@db193 ~]# /u01/app/19.3.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/19.3.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/19.3.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/db193/crsconfig/rootcrs_db193_2019-07-31_07-27-23AM.log

2019/07/31 07:28:07 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2019/07/31 07:28:08 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2019/07/31 07:28:08 CLSRSC-363: User ignored prerequisites during installation

2019/07/31 07:28:08 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2019/07/31 07:28:17 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2019/07/31 07:28:21 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

2019/07/31 07:28:21 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2019/07/31 07:28:21 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2019/07/31 07:31:54 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2019/07/31 07:32:24 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2019/07/31 07:32:37 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2019/07/31 07:33:17 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2019/07/31 07:33:18 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2019/07/31 07:33:42 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2019/07/31 07:33:43 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2019/07/31 07:34:48 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2019/07/31 07:35:21 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2019/07/31 07:35:50 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2019/07/31 07:36:14 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

ASM has been created and started successfully.

[DBT-30001] Disk groups created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-190731AM073727.log for details.

2019/07/31 07:40:00 CLSRSC-482: Running command: '/u01/app/19.3.0/grid/bin/ocrconfig -upgrade grid oinstall'

CRS-4256: Updating the profile

Successful addition of voting disk b789e47e76d74f06bf5f8b5cb4d62b88.

Successful addition of voting disk 3bc8119dfafe4fbebf7e1bf11aec8b9a.

Successful addition of voting disk bccdf28694a54ffcbf41354c7e4f133d.

Successfully replaced voting disk group with +CRS.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE b789e47e76d74f06bf5f8b5cb4d62b88 (/dev/asm-ocr1) [CRS]

2. ONLINE 3bc8119dfafe4fbebf7e1bf11aec8b9a (/dev/asm-ocr2) [CRS]

3. ONLINE bccdf28694a54ffcbf41354c7e4f133d (/dev/asm-ocr3) [CRS]

Located 3 voting disk(s).

2019/07/31 07:48:20 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2019/07/31 07:50:48 CLSRSC-343: Successfully started Oracle Clusterware stack

2019/07/31 07:50:49 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2019/07/31 08:04:16 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2019/07/31 08:08:26 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@db193 ~]#

After they succeed, run the root scripts as root on the second node:

[root@db195 ~]# /u01/app/oraInventory/orainstRoot.sh

Changing permissions of /u01/app/oraInventory.

Adding read,write permissions for group.

Removing read,write,execute permissions for world.

Changing groupname of /u01/app/oraInventory to oinstall.

The execution of the script is complete.

[root@db195 ~]# /u01/app/19.3.0/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/19.3.0/grid

Enter the full pathname of the local bin directory: [/usr/local/bin]:

Copying dbhome to /usr/local/bin ...

Copying oraenv to /usr/local/bin ...

Copying coraenv to /usr/local/bin ...

Creating /etc/oratab file...

Entries will be added to the /etc/oratab file as needed by

Database Configuration Assistant when a database is created

Finished running generic part of root script.

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/19.3.0/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/db195/crsconfig/rootcrs_db195_2019-07-31_08-10-55AM.log

2019/07/31 08:11:34 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2019/07/31 08:11:34 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2019/07/31 08:11:34 CLSRSC-363: User ignored prerequisites during installation

2019/07/31 08:11:34 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2019/07/31 08:11:44 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2019/07/31 08:11:44 CLSRSC-594: Executing installation step 5 of 19: 'SetupOSD'.

2019/07/31 08:11:45 CLSRSC-594: Executing installation step 6 of 19: 'CheckCRSConfig'.

2019/07/31 08:11:51 CLSRSC-594: Executing installation step 7 of 19: 'SetupLocalGPNP'.

2019/07/31 08:12:03 CLSRSC-594: Executing installation step 8 of 19: 'CreateRootCert'.

2019/07/31 08:12:04 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2019/07/31 08:12:32 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2019/07/31 08:12:33 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2019/07/31 08:12:56 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2019/07/31 08:12:59 CLSRSC-330: Adding Clusterware entries to file 'oracle-ohasd.service'

2019/07/31 08:13:59 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2019/07/31 08:14:31 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2019/07/31 08:14:42 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2019/07/31 08:14:51 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2019/07/31 08:14:58 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

2019/07/31 08:15:32 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

2019/07/31 08:17:41 CLSRSC-343: Successfully started Oracle Clusterware stack

2019/07/31 08:17:42 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2019/07/31 08:22:03 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2019/07/31 08:23:05 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

[root@db195 ~]#

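Judging by the timestamps in the log, this step still takes longer than it did in the 11g era, but it is considerably shorter than in 18c, the previous release in the 12c family.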
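To put a number on the elapsed time, the timestamps on the `CLSRSC-` lines can be subtracted. This is a rough sketch: the function name `root_sh_elapsed` is hypothetical, and it assumes the run starts and ends on the same calendar day, since only the time-of-day field is used.

```shell
# Hypothetical helper: read root.sh output on stdin and print the time
# between the first and last timestamped CLSRSC- line.
# Assumption: the run does not cross midnight.
root_sh_elapsed() {
    awk '
        $3 ~ /^CLSRSC-/ && $2 ~ /^[0-9][0-9]:[0-9][0-9]:[0-9][0-9]$/ {
            split($2, t, ":")
            secs = t[1] * 3600 + t[2] * 60 + t[3]
            if (!seen) { first = secs; seen = 1 }
            last = secs
        }
        END {
            if (!seen) exit 1            # no timestamped lines found
            d = last - first
            printf "%dm%02ds\n", int(d / 60), d % 60
        }
    '
}

# Usage (the capture path is an assumption):
#   root_sh_elapsed < /tmp/root_sh_node1.out
```

Applied to the two transcripts above, this gives 40m19s for db193 (07:28:07 to 08:08:26) and 11m31s for db195 (08:11:34 to 08:23:05).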

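Once the root scripts have completed successfully, continue the installation:

Note: the log shows that the error reported at the end comes from using only a single SCAN IP; it can be ignored.

This completes the GI configuration.

3.5 Verify the cluster state with crsctl

crsctl stat res -t shows the state of the cluster resources. Compared with 18c, 19c has trimmed the resource list considerably, apparently with stability in mind.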

[grid@db193 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE db193 STABLE

ONLINE ONLINE db195 STABLE

ora.chad

ONLINE ONLINE db193 STABLE

ONLINE ONLINE db195 STABLE

ora.net1.network

ONLINE ONLINE db193 STABLE

ONLINE ONLINE db195 STABLE

ora.ons

ONLINE ONLINE db193 STABLE

ONLINE ONLINE db195 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE db193 STABLE

2 ONLINE ONLINE db195 STABLE

3 OFFLINE OFFLINE STABLE

ora.CRS.dg(ora.asmgroup)

1 ONLINE ONLINE db193 STABLE

2 ONLINE ONLINE db195 STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE db193 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE db193 Started,STABLE

2 ONLINE ONLINE db195 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE db193 STABLE

2 ONLINE ONLINE db195 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE db193 STABLE

ora.db193.vip

1 ONLINE ONLINE db193 STABLE

ora.db195.vip

1 ONLINE ONLINE db195 STABLE

ora.qosmserver

1 ONLINE ONLINE db193 STABLE

ora.scan1.vip

1 ONLINE ONLINE db193 STABLE

--------------------------------------------------------------------------------

crsctl stat res -t -init

[grid@db193 ~]$ crsctl stat res -t -init

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.asm

1 ONLINE ONLINE db193 Started,STABLE

ora.cluster_interconnect.haip

1 ONLINE ONLINE db193 STABLE

ora.crf

1 ONLINE ONLINE db193 STABLE

ora.crsd

1 ONLINE ONLINE db193 STABLE

ora.cssd

1 ONLINE ONLINE db193 STABLE

ora.cssdmonitor

1 ONLINE ONLINE db193 STABLE

ora.ctssd

1 ONLINE ONLINE db193 ACTIVE:0,STABLE

ora.diskmon

1 OFFLINE OFFLINE STABLE

ora.evmd

1 ONLINE ONLINE db193 STABLE

ora.gipcd

1 ONLINE ONLINE db193 STABLE

ora.gpnpd

1 ONLINE ONLINE db193 STABLE

ora.mdnsd

1 ONLINE ONLINE db193 STABLE

ora.storage

1 ONLINE ONLINE db193 STABLE

--------------------------------------------------------------------------------
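Reading these listings by eye gets tedious, so here is a minimal sketch that flags any resource whose target is ONLINE but whose state is not. The function name `unhealthy_resources` is hypothetical, and the parsing assumes the column order shown above: local resources print target and state first, cluster resources print an instance number first.

```shell
# Hypothetical helper: read `crsctl stat res -t` output on stdin and list
# resources whose Target is ONLINE but whose State is something else.
unhealthy_resources() {
    awk '
        function check(target, state) {
            if (target == "ONLINE" && state != "ONLINE") {
                print res ": target=" target " state=" state
                bad = 1
            }
        }
        $1 ~ /^ora\./ { res = $1; next }                  # resource name line
        $1 ~ /^[0-9]+$/ { check($2, $3); next }           # cluster resource row
        $1 == "ONLINE" || $1 == "OFFLINE" { check($1, $2) }  # local resource row
        END { exit bad + 0 }
    '
}

# Usage, as the grid user:
#   crsctl stat res -t | unhealthy_resources && echo "all targets met"
```

Rows with target OFFLINE, such as the third ora.asmgroup instance or ora.diskmon, are deliberately not reported.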

3.6 Test the cluster's failover (FAILED OVER) behavior

Node 2 is rebooted; check the state from node 1:

[grid@db193 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE db193 STABLE

ora.chad

ONLINE ONLINE db193 STABLE

ora.net1.network

ONLINE ONLINE db193 STABLE

ora.ons

ONLINE ONLINE db193 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE ONLINE db193 STABLE

2 ONLINE OFFLINE STABLE

3 OFFLINE OFFLINE STABLE

ora.CRS.dg(ora.asmgroup)

1 ONLINE ONLINE db193 STABLE

2 ONLINE OFFLINE STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE db193 STABLE

ora.asm(ora.asmgroup)

1 ONLINE ONLINE db193 Started,STABLE

2 ONLINE OFFLINE STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE ONLINE db193 STABLE

2 ONLINE OFFLINE STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE db193 STABLE

ora.db193.vip

1 ONLINE ONLINE db193 STABLE

ora.db195.vip

1 ONLINE INTERMEDIATE db193 FAILED OVER,STABLE

ora.qosmserver

1 ONLINE ONLINE db193 STABLE

ora.scan1.vip

1 ONLINE ONLINE db193 STABLE

--------------------------------------------------------------------------------

Node 1 is rebooted; check the state from node 2:

[grid@db195 ~]$ crsctl stat res -t

--------------------------------------------------------------------------------

Name Target State Server State details

--------------------------------------------------------------------------------

Local Resources

--------------------------------------------------------------------------------

ora.LISTENER.lsnr

ONLINE ONLINE db195 STABLE

ora.chad

ONLINE ONLINE db195 STABLE

ora.net1.network

ONLINE ONLINE db195 STABLE

ora.ons

ONLINE ONLINE db195 STABLE

--------------------------------------------------------------------------------

Cluster Resources

--------------------------------------------------------------------------------

ora.ASMNET1LSNR_ASM.lsnr(ora.asmgroup)

1 ONLINE OFFLINE STABLE

2 ONLINE ONLINE db195 STABLE

3 OFFLINE OFFLINE STABLE

ora.CRS.dg(ora.asmgroup)

1 ONLINE OFFLINE STABLE

2 ONLINE ONLINE db195 STABLE

3 OFFLINE OFFLINE STABLE

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE db195 STABLE

ora.asm(ora.asmgroup)

1 ONLINE OFFLINE STABLE

2 ONLINE ONLINE db195 Started,STABLE

3 OFFLINE OFFLINE STABLE

ora.asmnet1.asmnetwork(ora.asmgroup)

1 ONLINE OFFLINE STABLE

2 ONLINE ONLINE db195 STABLE

3 OFFLINE OFFLINE STABLE

ora.cvu

1 ONLINE ONLINE db195 STABLE

ora.db193.vip

1 ONLINE INTERMEDIATE db195 FAILED OVER,STABLE

ora.db195.vip

1 ONLINE ONLINE db195 STABLE

ora.qosmserver

1 ONLINE ONLINE db195 STABLE

ora.scan1.vip

1 ONLINE ONLINE db195 STABLE

--------------------------------------------------------------------------------
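In both listings the rebooted node's VIP shows up on the surviving node with the detail "FAILED OVER". A minimal sketch for extracting just that fact from the output follows; the function name `failed_over_vips` is hypothetical, and it assumes the server name is the fourth field of a cluster resource row, as in the listings above.

```shell
# Hypothetical helper: read `crsctl stat res -t` output on stdin and print
# each VIP resource marked FAILED OVER with the server now hosting it.
failed_over_vips() {
    awk '
        $1 ~ /^ora\./ { res = $1; next }     # remember the current resource
        /FAILED OVER/ && res ~ /\.vip$/ {
            print res " -> " $4              # $4 is the hosting server
        }
    '
}

# Usage, as the grid user on the surviving node:
#   crsctl stat res -t | failed_over_vips
```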

Appendix: cluster log location:

-- If you forget it, adrci can show the log location

[grid@db195 trace]$ pwd

/u01/app/grid/diag/crs/db195/crs/trace

[grid@db195 trace]$ tail -20f alert.log

2019-07-31 09:13:53.813 [CRSD(9156)]CRS-8500: Oracle Clusterware CRSD process is starting with operating system process ID 9156

2019-07-31 09:14:05.943 [CRSD(9156)]CRS-1012: The OCR service started on node db195.

2019-07-31 09:14:06.585 [CRSD(9156)]CRS-1201: CRSD started on node db195.

2019-07-31 09:14:15.787 [ORAAGENT(9824)]CRS-8500: Oracle Clusterware ORAAGENT process is starting with operating system process ID 9824

2019-07-31 09:14:16.576 [ORAROOTAGENT(9856)]CRS-8500: Oracle Clusterware ORAROOTAGENT process is starting with operating system process ID 9856

2019-07-31 09:14:28.516 [ORAAGENT(10272)]CRS-8500: Oracle Clusterware ORAAGENT process is starting with operating system process ID 10272

2019-07-31 09:21:07.409 [OCTSSD(8378)]CRS-2407: The new Cluster Time Synchronization Service reference node is host db195.

2019-07-31 09:21:10.569 [OCSSD(7062)]CRS-1625: Node db193, number 1, was shut down

2019-07-31 09:21:10.948 [OCSSD(7062)]CRS-1601: CSSD Reconfiguration complete. Active nodes are db195 .

2019-07-31 09:21:11.055 [CRSD(9156)]CRS-5504: Node down event reported for node 'db193'.

2019-07-31 09:21:11.292 [CRSD(9156)]CRS-2773: Server 'db193' has been removed from pool 'Free'.

2019-07-31 09:22:25.944 [OLOGGERD(21377)]CRS-8500: Oracle Clusterware OLOGGERD process is starting with operating system process ID 21377

2019-07-31 09:23:41.207 [OCSSD(7062)]CRS-1601: CSSD Reconfiguration complete. Active nodes are db193 db195 .

[grid@db195 trace]$ tail -5f ocssd.trc

2019-07-31 09:35:40.732 : CSSD:527664896: [ INFO] clssgmDiscEndpcl: initiating gipcDestroy 0x27553

2019-07-31 09:35:40.732 : CSSD:527664896: [ INFO] clssgmDiscEndpcl: completed gipcDestroy 0x27553

2019-07-31 09:35:42.136 : CSSD:527664896: [ INFO] : Processing member data change type 1, size 4 for group HB+ASM, memberID 17:2:2

2019-07-31 09:35:42.136 : CSSD:527664896: [ INFO] : Sending member data change to GMP for group HB+ASM, memberID 17:2:2

2019-07-31 09:35:42.138 : CSSD:1010091776: [ INFO] clssgmpcMemberDataUpdt: grockName HB+ASM memberID 17:2:2, datatype 1 datasize 4
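When confirming a failover test afterwards, the node-down events can be pulled out of alert.log instead of scrolling through it. The sketch below keys on the CRS-1625 message format seen in the excerpt above; the function name `node_down_events` is hypothetical, and the exact message wording is assumed to match this 19.3 environment.

```shell
# Hypothetical helper: read the clusterware alert.log on stdin and print
# "<timestamp> <node>" for every CRS-1625 node shutdown message.
node_down_events() {
    sed -n 's/^\([0-9-]* [0-9:.]*\) .*CRS-1625: Node \([^,]*\), number [0-9]*, was shut down.*/\1 \2/p'
}

# Usage, as the grid user (path as shown above for node db195):
#   node_down_events < /u01/app/grid/diag/crs/db195/crs/trace/alert.log
```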

This completes the 19c GI configuration.